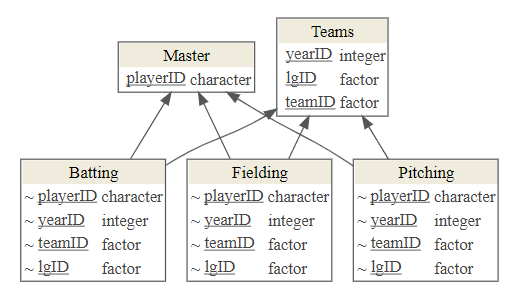

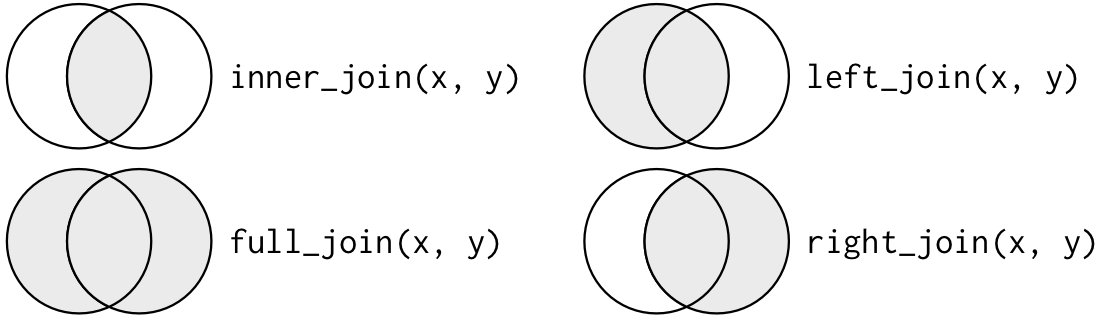

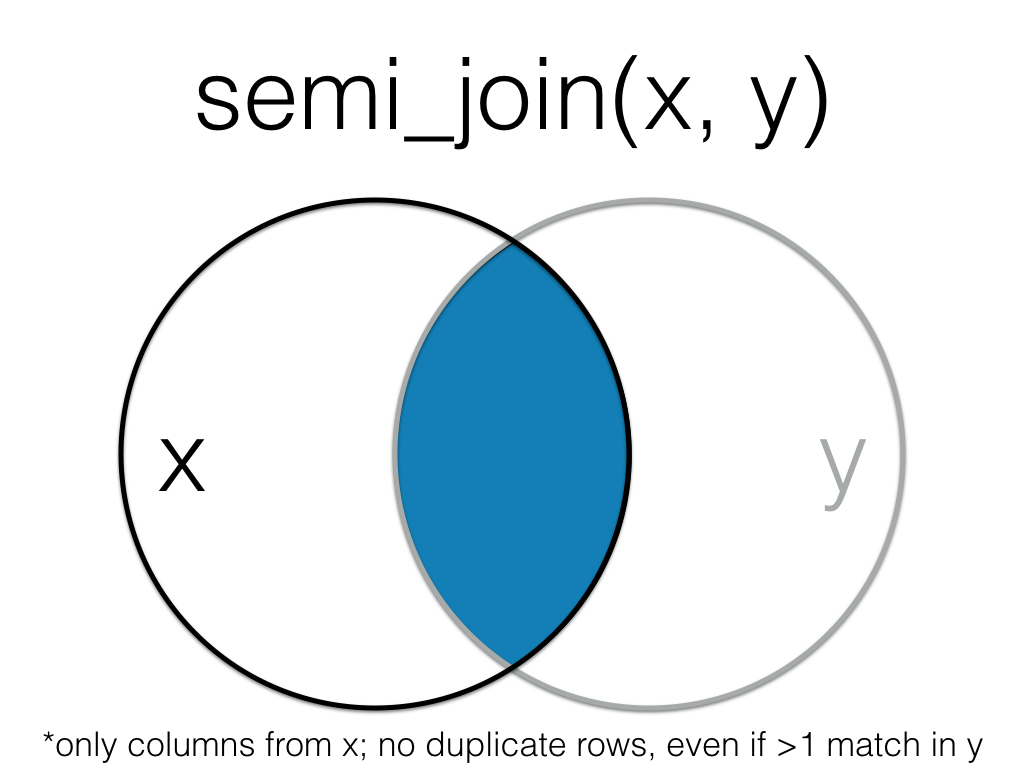

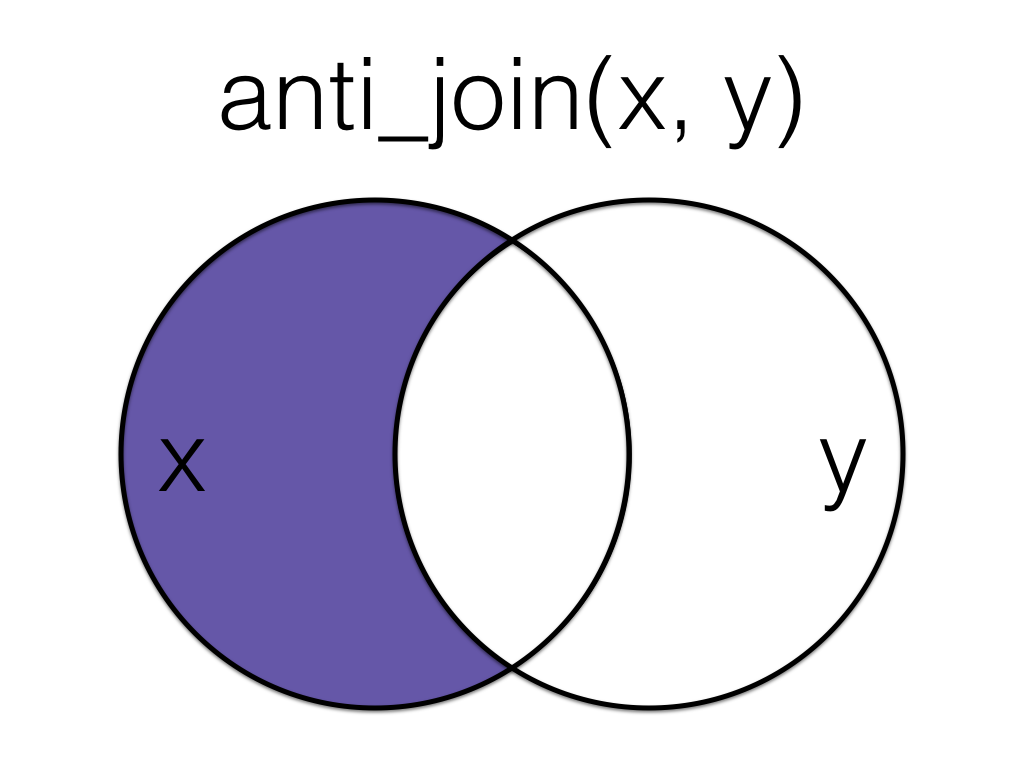

class: title-slide, left, top background-image: url(img/sam-balye-k5RD4dl8Y1o-unsplash_blue.jpg) background-position: 75% 75% background-size: cover # C-Path R Training ### Advanced Data Wrangling Part III **Kelsey Gonzalez**<br> May 28, 2021 — Day 3 --- name: about-me layout: false class: about-me-slide, inverse, middle, center # About me <img src="https://kelseygonzalez.github.io/author/kelsey-e.-gonzalez/avatar.png" class="rounded"/> ## Kelsey Gonzalez .fade[University of Arizona<br>IBM] [<svg viewBox="0 0 512 512" style="height:1em;position:relative;display:inline-block;top:.1em;" xmlns="http://www.w3.org/2000/svg"> <path d="M326.612 185.391c59.747 59.809 58.927 155.698.36 214.59-.11.12-.24.25-.36.37l-67.2 67.2c-59.27 59.27-155.699 59.262-214.96 0-59.27-59.26-59.27-155.7 0-214.96l37.106-37.106c9.84-9.84 26.786-3.3 27.294 10.606.648 17.722 3.826 35.527 9.69 52.721 1.986 5.822.567 12.262-3.783 16.612l-13.087 13.087c-28.026 28.026-28.905 73.66-1.155 101.96 28.024 28.579 74.086 28.749 102.325.51l67.2-67.19c28.191-28.191 28.073-73.757 0-101.83-3.701-3.694-7.429-6.564-10.341-8.569a16.037 16.037 0 0 1-6.947-12.606c-.396-10.567 3.348-21.456 11.698-29.806l21.054-21.055c5.521-5.521 14.182-6.199 20.584-1.731a152.482 152.482 0 0 1 20.522 17.197zM467.547 44.449c-59.261-59.262-155.69-59.27-214.96 0l-67.2 67.2c-.12.12-.25.25-.36.37-58.566 58.892-59.387 154.781.36 214.59a152.454 152.454 0 0 0 20.521 17.196c6.402 4.468 15.064 3.789 20.584-1.731l21.054-21.055c8.35-8.35 12.094-19.239 11.698-29.806a16.037 16.037 0 0 0-6.947-12.606c-2.912-2.005-6.64-4.875-10.341-8.569-28.073-28.073-28.191-73.639 0-101.83l67.2-67.19c28.239-28.239 74.3-28.069 102.325.51 27.75 28.3 26.872 73.934-1.155 101.96l-13.087 13.087c-4.35 4.35-5.769 10.79-3.783 16.612 5.864 17.194 9.042 34.999 9.69 52.721.509 13.906 17.454 20.446 27.294 10.606l37.106-37.106c59.271-59.259 59.271-155.699.001-214.959z"></path></svg> kelseygonzalez.github.io](https://kelseygonzalez.github.io/) [<svg viewBox="0 0 512 512" 
style="height:1em;position:relative;display:inline-block;top:.1em;" xmlns="http://www.w3.org/2000/svg"> <path d="M459.37 151.716c.325 4.548.325 9.097.325 13.645 0 138.72-105.583 298.558-298.558 298.558-59.452 0-114.68-17.219-161.137-47.106 8.447.974 16.568 1.299 25.34 1.299 49.055 0 94.213-16.568 130.274-44.832-46.132-.975-84.792-31.188-98.112-72.772 6.498.974 12.995 1.624 19.818 1.624 9.421 0 18.843-1.3 27.614-3.573-48.081-9.747-84.143-51.98-84.143-102.985v-1.299c13.969 7.797 30.214 12.67 47.431 13.319-28.264-18.843-46.781-51.005-46.781-87.391 0-19.492 5.197-37.36 14.294-52.954 51.655 63.675 129.3 105.258 216.365 109.807-1.624-7.797-2.599-15.918-2.599-24.04 0-57.828 46.782-104.934 104.934-104.934 30.213 0 57.502 12.67 76.67 33.137 23.715-4.548 46.456-13.32 66.599-25.34-7.798 24.366-24.366 44.833-46.132 57.827 21.117-2.273 41.584-8.122 60.426-16.243-14.292 20.791-32.161 39.308-52.628 54.253z"></path></svg> @KelseyEGonzalez](https://twitter.com/kelseyegonzalez) [<svg viewBox="0 0 496 512" style="position:relative;display:inline-block;top:.1em;height:1em;" xmlns="http://www.w3.org/2000/svg"> <path d="M165.9 397.4c0 2-2.3 3.6-5.2 3.6-3.3.3-5.6-1.3-5.6-3.6 0-2 2.3-3.6 5.2-3.6 3-.3 5.6 1.3 5.6 3.6zm-31.1-4.5c-.7 2 1.3 4.3 4.3 4.9 2.6 1 5.6 0 6.2-2s-1.3-4.3-4.3-5.2c-2.6-.7-5.5.3-6.2 2.3zm44.2-1.7c-2.9.7-4.9 2.6-4.6 4.9.3 2 2.9 3.3 5.9 2.6 2.9-.7 4.9-2.6 4.6-4.6-.3-1.9-3-3.2-5.9-2.9zM244.8 8C106.1 8 0 113.3 0 252c0 110.9 69.8 205.8 169.5 239.2 12.8 2.3 17.3-5.6 17.3-12.1 0-6.2-.3-40.4-.3-61.4 0 0-70 15-84.7-29.8 0 0-11.4-29.1-27.8-36.6 0 0-22.9-15.7 1.6-15.4 0 0 24.9 2 38.6 25.8 21.9 38.6 58.6 27.5 72.9 20.9 2.3-16 8.8-27.1 16-33.7-55.9-6.2-112.3-14.3-112.3-110.5 0-27.5 7.6-41.3 23.6-58.9-2.6-6.5-11.1-33.3 2.6-67.9 20.9-6.5 69 27 69 27 20-5.6 41.5-8.5 62.8-8.5s42.8 2.9 62.8 8.5c0 0 48.1-33.6 69-27 13.7 34.7 5.2 61.4 2.6 67.9 16 17.7 25.8 31.5 25.8 58.9 0 96.5-58.9 104.2-114.8 110.5 9.2 7.9 17 22.9 17 46.4 0 33.7-.3 75.4-.3 83.6 0 6.5 4.6 14.4 17.3 12.1C428.2 457.8 496 
362.9 496 252 496 113.3 383.5 8 244.8 8zM97.2 352.9c-1.3 1-1 3.3.7 5.2 1.6 1.6 3.9 2.3 5.2 1 1.3-1 1-3.3-.7-5.2-1.6-1.6-3.9-2.3-5.2-1zm-10.8-8.1c-.7 1.3.3 2.9 2.3 3.9 1.6 1 3.6.7 4.3-.7.7-1.3-.3-2.9-2.3-3.9-2-.6-3.6-.3-4.3.7zm32.4 35.6c-1.6 1.3-1 4.3 1.3 6.2 2.3 2.3 5.2 2.6 6.5 1 1.3-1.3.7-4.3-1.3-6.2-2.2-2.3-5.2-2.6-6.5-1zm-11.4-14.7c-1.6 1-1.6 3.6 0 5.9 1.6 2.3 4.3 3.3 5.6 2.3 1.6-1.3 1.6-3.9 0-6.2-1.4-2.3-4-3.3-5.6-2z"></path></svg> @KelseyGonzalez](https://github.com/KelseyGonzalez) --- layout: true <!-- <a class="footer-link" href="http://bit.ly/cpath-wrangling">http://bit.ly/cpath-wrangling — Kelsey Gonzalez</a> --> <!-- this adds the link footer to all slides, depends on footer-link class in css--> --- class: left # About you -- .pull-left-narrow[.center[ <img src="https://raw.githubusercontent.com/rstudio/hex-stickers/master/PNG/tidyverse.png" width="25%"/>]] .pull-right-wide[### You're pretty good at data wrangling & special variable types ] -- .pull-left-narrow[ .center[<svg viewBox="0 0 512 512" style="position:relative;display:inline-block;top:.1em;height:2em;" xmlns="http://www.w3.org/2000/svg"> <path d="M224 96l16-32 32-16-32-16-16-32-16 32-32 16 32 16 16 32zM80 160l26.66-53.33L160 80l-53.34-26.67L80 0 53.34 53.33 0 80l53.34 26.67L80 160zm352 128l-26.66 53.33L352 368l53.34 26.67L432 448l26.66-53.33L512 368l-53.34-26.67L432 288zm70.62-193.77L417.77 9.38C411.53 3.12 403.34 0 395.15 0c-8.19 0-16.38 3.12-22.63 9.38L9.38 372.52c-12.5 12.5-12.5 32.76 0 45.25l84.85 84.85c6.25 6.25 14.44 9.37 22.62 9.37 8.19 0 16.38-3.12 22.63-9.37l363.14-363.15c12.5-12.48 12.5-32.75 0-45.24zM359.45 203.46l-50.91-50.91 86.6-86.6 50.91 50.91-86.6 86.6z"></path></svg>]] .pull-right-wide[### You're a great data wrangler] -- .pull-left-narrow[ .center[<svg viewBox="0 0 512 512" style="position:relative;display:inline-block;top:.1em;height:2em;" xmlns="http://www.w3.org/2000/svg"> <path d="M223.75 130.75L154.62 15.54A31.997 31.997 0 0 0 127.18 0H16.03C3.08 0-4.5 14.57 2.92 
25.18l111.27 158.96c29.72-27.77 67.52-46.83 109.56-53.39zM495.97 0H384.82c-11.24 0-21.66 5.9-27.44 15.54l-69.13 115.21c42.04 6.56 79.84 25.62 109.56 53.38L509.08 25.18C516.5 14.57 508.92 0 495.97 0zM256 160c-97.2 0-176 78.8-176 176s78.8 176 176 176 176-78.8 176-176-78.8-176-176-176zm92.52 157.26l-37.93 36.96 8.97 52.22c1.6 9.36-8.26 16.51-16.65 12.09L256 393.88l-46.9 24.65c-8.4 4.45-18.25-2.74-16.65-12.09l8.97-52.22-37.93-36.96c-6.82-6.64-3.05-18.23 6.35-19.59l52.43-7.64 23.43-47.52c2.11-4.28 6.19-6.39 10.28-6.39 4.11 0 8.22 2.14 10.33 6.39l23.43 47.52 52.43 7.64c9.4 1.36 13.17 12.95 6.35 19.59z"></path></svg>]] .pull-right-wide[### .my-gold[You want to master some advanced logic ]] --- # Learning Objectives - Describe relational data. - Use the correct R tidyverse function to manipulate data: + `inner_join()`, `left_join()`, `right_join()`, `full_join()`, + `semi_join()`, `anti_join()` - Apply additional dplyr 1.0 functions to manipulate data and data frames for analysis + `distinct()` + `across()` + `rowwise()` - Apply techniques of iteration to reduce and simplify your code. --- name: question class: inverse, middle, center <svg viewBox="0 0 448 512" style="position:relative;display:inline-block;top:.1em;height:2em;" xmlns="http://www.w3.org/2000/svg"> <path d="M448 73.143v45.714C448 159.143 347.667 192 224 192S0 159.143 0 118.857V73.143C0 32.857 100.333 0 224 0s224 32.857 224 73.143zM448 176v102.857C448 319.143 347.667 352 224 352S0 319.143 0 278.857V176c48.125 33.143 136.208 48.572 224 48.572S399.874 209.143 448 176zm0 160v102.857C448 479.143 347.667 512 224 512S0 479.143 0 438.857V336c48.125 33.143 136.208 48.572 224 48.572S399.874 369.143 448 336z"></path></svg> # What can I do to connect two tables together? --- # Relational Data ---- Many datasets (especially those drawn from relational databases) consist of more than one data frame.
These data frames are often *logically related*: rows in one data frame correspond to, or *have a relation to*, rows in another data frame. --- # The Lahman Baseball Dataset <img src="img/lahman.png" class="title-hex">
```r
install.packages("Lahman")
library(Lahman)
```
Use `data(package = "package-name")` to list the data sets in a package: ```r data(package = "Lahman") ``` --- name: image class: middle, center # The Lahman Baseball Dataset <img src="img/lahman.png" class="title-hex">  --- # Keys in Relational Databases <img src="img/lahman.png" class="title-hex"> Every table should have a **primary key** that uniquely identifies (differentiates) its rows. + Keys must be unique in their own table, i.e., each key value refers to only one instance of an item. + Good data engineers create keys that have no intrinsic meaning beyond being a unique identifier. + Some tables use a *combined key* built from multiple columns, e.g., year, month, and day together. + The primary key from one table may appear many times in other tables, where it acts as a *foreign key*. --- # Keys in Relational Databases <img src="img/pipe.png" class="title-hex"><img src="img/lahman.png" class="title-hex"><img src="img/dplyr.png" class="title-hex"> *Example*: `People$playerID` is a primary key for `People` because it uniquely identifies the rows in `People`. .small[To check whether you have identified the primary key fields, use `count(primary_key_fields)` and `filter(n > 1)` to see whether any group has multiple rows. If any group has more than one row, the fields are insufficient to serve as a primary key.] .pull-left[ Last name is *not* a primary key ```r People %>% count(nameLast) %>% filter(n > 1) ## # A tibble: 2,590 x 2 ## nameLast n ## <chr> <int> ## 1 Aaron 2 ## 2 Abad 2 ## 3 Abbey 2 ## 4 Abbott 9 ## 5 Abercrombie 2 ## 6 Abernathy 4 ## 7 Abrams 2 ## 8 Abreu 8 ## 9 Acevedo 2 ## 10 Acker 2 ## # ...
with 2,580 more rows ``` ] .pull-left[ `playerID` *is* a primary key ```r People %>% count(playerID) %>% filter(n > 1) ## # A tibble: 0 x 2 ## # ... with 2 variables: playerID <chr>, n <int> ``` ] --- # Joins <img src="img/dplyr.png" class="title-hex"> - Getting data from two (or more) tables requires using primary keys and foreign keys to logically connect the tables. - These "connections" are called **joins**. - The dplyr package has functions to join tables so you can work with their data together. - The dplyr package supports seven types of joins: + Four types of **mutating joins**, + Two types of **filtering joins**, and + One **nesting join**, `nest_join()`. --- name: image class: middle, center # Join Types <img src="img/dplyr.png" class="title-hex">  --- # Inner Join<img src="img/dplyr.png" class="title-hex"> .pull-left[ - Returns *all rows from `x` where there are matching values in `y`*, and *all columns from `x` and `y`*. - If there are multiple matches between `x` and `y`, all combinations of the matches are returned. - Rows that do not match are not returned.] .pull-right[  ] --- # Full Join<img src="img/dplyr.png" class="title-hex"> .pull-left[ - Returns *all rows and all columns from both `x` and `y`*. - Where there is no matching value, `NA` is returned for the missing values. ] .pull-right[  ] --- # Left Join<img src="img/dplyr.png" class="title-hex"> .pull-left[ - Returns *all rows from `x`*, and *all columns from `x` and `y`*. - Rows in `x` with no match in `y` are returned but will have `NA` values in the new columns. - If there are multiple matches between `x` and `y`, all combinations of the matches are returned. ] .pull-right[  ] --- # Right Join<img src="img/dplyr.png" class="title-hex"> .pull-left[ - Returns *all rows from `y`*, and *all columns from `x` and `y`*. - Rows in `y` with no match in `x` will have `NA` values in the new columns. - If there are multiple matches between `x` and `y`, all combinations of the matches are returned.
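To compare the four mutating joins side by side, here is a minimal sketch on two tiny made-up tables. `players` and `teams` are hypothetical (not Lahman data), and the sketch assumes dplyr 1.0 or later is installed.

```r
library(dplyr)

# Hypothetical toy tables; both share the key column teamID
players <- tibble(
  playerID = c("p1", "p2", "p3"),
  teamID   = c("BOS", "NYA", "SEA")
)
teams <- tibble(
  teamID = c("BOS", "NYA", "CHA"),
  name   = c("Boston Red Sox", "New York Yankees", "Chicago White Sox")
)

# Only keys found in both tables: p1 and p2
joined_inner <- inner_join(players, teams, by = "teamID")
# Every row of x: p3 (SEA) gets NA in name
joined_left  <- left_join(players, teams, by = "teamID")
# Every row of y: CHA gets NA in playerID
joined_right <- right_join(players, teams, by = "teamID")
# Every row of both tables: 4 rows in total
joined_full  <- full_join(players, teams, by = "teamID")

# A duplicated key in y returns every matching combination,
# so p1 (BOS) now appears twice
teams_dup <- bind_rows(teams, tibble(teamID = "BOS", name = "Boston Americans"))
joined_left_dup <- left_join(players, teams_dup, by = "teamID")

joined_full
joined_left_dup
```

Note that `right_join(x, y)` keeps the same rows as `left_join(y, x)` (possibly in a different order); only the column arrangement differs.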
] .pull-right[  ] --- name: live-coding background-color: var(--my-yellow) class: middle, center <svg viewBox="0 0 640 512" style="position:relative;display:inline-block;top:.1em;height:3em;color:#122140;" xmlns="http://www.w3.org/2000/svg"> <path d="M278.9 511.5l-61-17.7c-6.4-1.8-10-8.5-8.2-14.9L346.2 8.7c1.8-6.4 8.5-10 14.9-8.2l61 17.7c6.4 1.8 10 8.5 8.2 14.9L293.8 503.3c-1.9 6.4-8.5 10.1-14.9 8.2zm-114-112.2l43.5-46.4c4.6-4.9 4.3-12.7-.8-17.2L117 256l90.6-79.7c5.1-4.5 5.5-12.3.8-17.2l-43.5-46.4c-4.5-4.8-12.1-5.1-17-.5L3.8 247.2c-5.1 4.7-5.1 12.8 0 17.5l144.1 135.1c4.9 4.6 12.5 4.4 17-.5zm327.2.6l144.1-135.1c5.1-4.7 5.1-12.8 0-17.5L492.1 112.1c-4.8-4.5-12.4-4.3-17 .5L431.6 159c-4.6 4.9-4.3 12.7.8 17.2L523 256l-90.6 79.7c-5.1 4.5-5.5 12.3-.8 17.2l43.5 46.4c4.5 4.9 12.1 5.1 17 .6z"></path></svg><br> # Let's try it live together --- name: your-turn background-color: var(--my-red) class: inverse .left-column[ ## Your turn<br><svg viewBox="0 0 576 512" style="height:1em;position:relative;display:inline-block;top:.1em;" xmlns="http://www.w3.org/2000/svg"> <path d="M402.3 344.9l32-32c5-5 13.7-1.5 13.7 5.7V464c0 26.5-21.5 48-48 48H48c-26.5 0-48-21.5-48-48V112c0-26.5 21.5-48 48-48h273.5c7.1 0 10.7 8.6 5.7 13.7l-32 32c-1.5 1.5-3.5 2.3-5.7 2.3H48v352h352V350.5c0-2.1.8-4.1 2.3-5.6zm156.6-201.8L296.3 405.7l-90.4 10c-26.2 2.9-48.5-19.2-45.6-45.6l10-90.4L432.9 17.1c22.9-22.9 59.9-22.9 82.7 0l43.2 43.2c22.9 22.9 22.9 60 .1 82.8zM460.1 174L402 115.9 216.2 301.8l-7.3 65.3 65.3-7.3L460.1 174zm64.8-79.7l-43.2-43.2c-4.1-4.1-10.8-4.1-14.8 0L436 82l58.1 58.1 30.9-30.9c4-4.2 4-10.8-.1-14.9z"></path></svg><br> ] .right-column[ ### Let's practice inner and outer joins! ---- - Select all batting stats for players who were born in the 1980s. - Add the team name to the `Batting` data frame. 
- List the first name, last name, and team name for every player who played in 2018. ] <div class="countdown blink-colon noupdate-15" id="timer_60b09bd0" style="bottom:0;left:0;" data-warnwhen="0"> <code class="countdown-time"><span class="countdown-digits minutes">04</span><span class="countdown-digits colon">:</span><span class="countdown-digits seconds">30</span></code> </div> --- # Filtering Joins<img src="img/dplyr.png" class="title-hex"> Filtering joins filter rows in the left (`x`) data frame and _don't add any new columns_. You can think of them as ways to filter one dataset based on the values in another. .pull-left[  ] .pull-right[  ] --- # semi_join<img src="img/dplyr.png" class="title-hex"> .pull-left[ - Returns all rows from `x` where there *are* matching key values in `y`, keeping *just the columns from `x`*. - Filters out rows in `x` that do *not* match anything in `y`. ] .pull-right[  ] --- # anti_join<img src="img/dplyr.png" class="title-hex"> .pull-left[ - Returns all rows from `x` where there are *no* matching key values in `y`, keeping *just the columns from `x`*. - Filters out rows in `x` that *do* match something in `y`, keeping only the rows with no match.
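A minimal sketch of the two filtering joins, using hypothetical toy tables (`players` and `all_stars` are made up for illustration) and assuming dplyr 1.0 or later:

```r
library(dplyr)

# Hypothetical toy tables sharing the key column playerID
players <- tibble(
  playerID = c("p1", "p2", "p3", "p4"),
  nameLast = c("Aaron", "Abad", "Abbey", "Abbott")
)
all_stars <- tibble(
  playerID = c("p2", "p4", "p4"),  # p4 appears twice
  yearID   = c(2018, 2017, 2018)
)

# semi_join(): players with at least one match, keeping only players' columns
chosen <- semi_join(players, all_stars, by = "playerID")
chosen  # p2 and p4, each exactly once

# anti_join(): players with no match at all
never <- anti_join(players, all_stars, by = "playerID")
never   # p1 and p3

# For contrast, inner_join() adds yearID and repeats p4 once per match
inner_join(players, all_stars, by = "playerID")
```

Because a filtering join never duplicates rows of `x`, `semi_join()` answers "which rows of `x` have a match?" without the extra rows an inner join can create.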
] .pull-right[  ] --- name: your-turn background-color: var(--my-red) class: inverse .left-column[ ## Your turn<br><svg viewBox="0 0 576 512" style="height:1em;position:relative;display:inline-block;top:.1em;" xmlns="http://www.w3.org/2000/svg"> <path d="M402.3 344.9l32-32c5-5 13.7-1.5 13.7 5.7V464c0 26.5-21.5 48-48 48H48c-26.5 0-48-21.5-48-48V112c0-26.5 21.5-48 48-48h273.5c7.1 0 10.7 8.6 5.7 13.7l-32 32c-1.5 1.5-3.5 2.3-5.7 2.3H48v352h352V350.5c0-2.1.8-4.1 2.3-5.6zm156.6-201.8L296.3 405.7l-90.4 10c-26.2 2.9-48.5-19.2-45.6-45.6l10-90.4L432.9 17.1c22.9-22.9 59.9-22.9 82.7 0l43.2 43.2c22.9 22.9 22.9 60 .1 82.8zM460.1 174L402 115.9 216.2 301.8l-7.3 65.3 65.3-7.3L460.1 174zm64.8-79.7l-43.2-43.2c-4.1-4.1-10.8-4.1-14.8 0L436 82l58.1 58.1 30.9-30.9c4-4.2 4-10.8-.1-14.9z"></path></svg><br> ] .right-column[ ### Let's practice joins on NHANES! ---- ```r demographics <- read_csv("http://bit.ly/nhanes-demo") drug_history <- read_csv("http://bit.ly/nhanes-drugs") health_measu <- read_csv("http://bit.ly/nhanes-health") ``` Load the above datasets. Using your newly acquired joining skills, piece the dataset back together (for the most part). What type of join is most appropriate here? 
] <div class="countdown blink-colon noupdate-15" id="timer_60b09c06" style="bottom:0;left:0;" data-warnwhen="0"> <code class="countdown-time"><span class="countdown-digits minutes">03</span><span class="countdown-digits colon">:</span><span class="countdown-digits seconds">00</span></code> </div> --- name: break background-color: var(--my-yellow) class: middle, center <svg viewBox="0 0 448 512" style="position:relative;display:inline-block;top:.1em;height:2em;" xmlns="http://www.w3.org/2000/svg"> <path d="M144 479H48c-26.5 0-48-21.5-48-48V79c0-26.5 21.5-48 48-48h96c26.5 0 48 21.5 48 48v352c0 26.5-21.5 48-48 48zm304-48V79c0-26.5-21.5-48-48-48h-96c-26.5 0-48 21.5-48 48v352c0 26.5 21.5 48 48 48h96c26.5 0 48-21.5 48-48z"></path></svg> # Break <div class="countdown" id="timer_60b09a17" style="right:0;bottom:0;left:0;" data-warnwhen="0"> <code class="countdown-time"><span class="countdown-digits minutes">03</span><span class="countdown-digits colon">:</span><span class="countdown-digits seconds">00</span></code> </div> --- name: question class: inverse, middle, center <svg viewBox="0 0 448 512" style="position:relative;display:inline-block;top:.1em;height:2em;" xmlns="http://www.w3.org/2000/svg"> <path d="M433.941 65.941l-51.882-51.882A48 48 0 0 0 348.118 0H176c-26.51 0-48 21.49-48 48v48H48c-26.51 0-48 21.49-48 48v320c0 26.51 21.49 48 48 48h224c26.51 0 48-21.49 48-48v-48h80c26.51 0 48-21.49 48-48V99.882a48 48 0 0 0-14.059-33.941zM266 464H54a6 6 0 0 1-6-6V150a6 6 0 0 1 6-6h74v224c0 26.51 21.49 48 48 48h96v42a6 6 0 0 1-6 6zm128-96H182a6 6 0 0 1-6-6V54a6 6 0 0 1 6-6h106v88c0 13.255 10.745 24 24 24h88v202a6 6 0 0 1-6 6zm6-256h-64V48h9.632c1.591 0 3.117.632 4.243 1.757l48.368 48.368a6 6 0 0 1 1.757 4.243V112z"></path></svg> # Some advanced row reduction techniques --- # distinct<img src="img/pipe.png" class="title-hex"><img src="img/dplyr.png" class="title-hex"> - We used `distinct()` earlier, after grouping, to remove duplicate entries.
- `distinct()` keeps only the unique (distinct) rows of a data frame. - The rows returned are a subset of the input and appear in the same order. ```r nhanes %>% distinct(BMI_WHO) ## # A tibble: 5 x 1 ## BMI_WHO ## <fct> ## 1 30.0_plus ## 2 12.0_18.5 ## 3 18.5_to_24.9 ## 4 <NA> ## 5 25.0_to_29.9 ``` --- # distinct<img src="img/pipe.png" class="title-hex"><img src="img/dplyr.png" class="title-hex">
```r
nhanes %>% distinct(Gender, BMI_WHO)
## # A tibble: 10 x 2
## Gender BMI_WHO
## <fct> <fct>
## 1 male 30.0_plus
## 2 male 12.0_18.5
## 3 male 18.5_to_24.9
## 4 female 30.0_plus
## 5 female <NA>
## 6 male 25.0_to_29.9
## 7 male <NA>
## 8 female 25.0_to_29.9
## 9 female 18.5_to_24.9
## 10 female 12.0_18.5

# Very similar to count(), which also reports the number of rows (n) per combination
nhanes %>% count(BMI_WHO, Gender)
## # A tibble: 10 x 3
## BMI_WHO Gender n
## <fct> <fct> <int>
## 1 12.0_18.5 female 1765
## 2 12.0_18.5 male 1876
## 3 18.5_to_24.9 female 2742
## 4 18.5_to_24.9 male 2612
## 5 25.0_to_29.9 female 1970
## 6 25.0_to_29.9 male 2417
## 7 30.0_plus female 2526
## 8 30.0_plus male 2039
## 9 <NA> female 1209
## 10 <NA> male 1137
```
--- # slice<img src="img/pipe.png" class="title-hex"><img src="img/dplyr.png" class="title-hex"> Sometimes we want to select a random subset of a data frame's rows, or the rows with the most extreme values of a column. The `slice_*()` functions do this. ---- `slice_sample()` randomly selects rows. .panelset[ .panel[.panel-name[n] ```r nhanes %>% slice_sample(n = 6) ## # A tibble: 6 x 78 ## ID SurveyYr Gender Age AgeMonths Race1 Race3 Education MaritalStatus ## <int> <fct> <fct> <int> <int> <fct> <fct> <fct> <fct> ## 1 53648 2009_10 male 11 137 Black <NA> <NA> <NA> ## 2 57668 2009_10 female 31 377 White <NA> Some Colle~ Divorced ## 3 55151 2009_10 male 11 140 White <NA> <NA> <NA> ## 4 59555 2009_10 female 75 902 Other <NA> 9 - 11th G~ Widowed ## 5 70422 2011_12 female 9 NA Hispan~ Hispa~ <NA> <NA> ## 6 57486 2009_10 female 1 14 Mexican <NA> <NA> <NA> ## # ...
with 69 more variables: HHIncome <fct>, HHIncomeMid <int>, Poverty <dbl>, ## # HomeRooms <int>, HomeOwn <fct>, Work <fct>, Weight <dbl>, Length <dbl>, ## # HeadCirc <dbl>, Height <dbl>, BMI <dbl>, BMICatUnder20yrs <fct>, ## # BMI_WHO <fct>, Pulse <int>, BPSysAve <int>, BPDiaAve <int>, BPSys1 <int>, ## # BPDia1 <int>, BPSys2 <int>, BPDia2 <int>, BPSys3 <int>, BPDia3 <int>, ## # Testosterone <dbl>, DirectChol <dbl>, TotChol <dbl>, UrineVol1 <int>, ## # UrineFlow1 <dbl>, UrineVol2 <int>, UrineFlow2 <dbl>, Diabetes <fct>, ## # DiabetesAge <int>, HealthGen <fct>, DaysPhysHlthBad <int>, ## # DaysMentHlthBad <int>, LittleInterest <fct>, Depressed <fct>, ## # nPregnancies <int>, nBabies <int>, Age1stBaby <int>, SleepHrsNight <int>, ## # SleepTrouble <fct>, PhysActive <fct>, PhysActiveDays <int>, TVHrsDay <fct>, ## # CompHrsDay <fct>, TVHrsDayChild <int>, CompHrsDayChild <int>, ## # Alcohol12PlusYr <fct>, AlcoholDay <int>, AlcoholYear <int>, SmokeNow <fct>, ## # Smoke100 <fct>, SmokeAge <int>, Marijuana <fct>, AgeFirstMarij <int>, ## # RegularMarij <fct>, AgeRegMarij <int>, HardDrugs <fct>, SexEver <fct>, ## # SexAge <int>, SexNumPartnLife <int>, SexNumPartYear <int>, SameSex <fct>, ## # SexOrientation <fct>, WTINT2YR <dbl>, WTMEC2YR <dbl>, SDMVPSU <int>, ## # SDMVSTRA <int>, PregnantNow <fct> ``` ] .panel[.panel-name[prop] ```r nhanes %>% slice_sample(prop = 0.005) ## # A tibble: 101 x 78 ## ID SurveyYr Gender Age AgeMonths Race1 Race3 Education MaritalStatus ## <int> <fct> <fct> <int> <int> <fct> <fct> <fct> <fct> ## 1 53110 2009_10 female 55 671 Other <NA> Some Colle~ Divorced ## 2 55930 2009_10 male 2 26 Mexican <NA> <NA> <NA> ## 3 68749 2011_12 female 21 NA Black Black High School NeverMarried ## 4 52961 2009_10 female 73 876 White <NA> Some Colle~ Widowed ## 5 57181 2009_10 male 62 745 White <NA> 9 - 11th G~ Married ## 6 68370 2011_12 female 10 NA White White <NA> <NA> ## 7 56540 2009_10 female 1 19 White <NA> <NA> <NA> ## 8 68114 2011_12 female 16 NA White White <NA> 
<NA> ## 9 61208 2009_10 female 44 537 White <NA> High School Married ## 10 63924 2011_12 female 29 NA Black Black High School Separated ## # ... with 91 more rows, and 69 more variables: HHIncome <fct>, ## # HHIncomeMid <int>, Poverty <dbl>, HomeRooms <int>, HomeOwn <fct>, ## # Work <fct>, Weight <dbl>, Length <dbl>, HeadCirc <dbl>, Height <dbl>, ## # BMI <dbl>, BMICatUnder20yrs <fct>, BMI_WHO <fct>, Pulse <int>, ## # BPSysAve <int>, BPDiaAve <int>, BPSys1 <int>, BPDia1 <int>, BPSys2 <int>, ## # BPDia2 <int>, BPSys3 <int>, BPDia3 <int>, Testosterone <dbl>, ## # DirectChol <dbl>, TotChol <dbl>, UrineVol1 <int>, UrineFlow1 <dbl>, ## # UrineVol2 <int>, UrineFlow2 <dbl>, Diabetes <fct>, DiabetesAge <int>, ## # HealthGen <fct>, DaysPhysHlthBad <int>, DaysMentHlthBad <int>, ## # LittleInterest <fct>, Depressed <fct>, nPregnancies <int>, nBabies <int>, ## # Age1stBaby <int>, SleepHrsNight <int>, SleepTrouble <fct>, ## # PhysActive <fct>, PhysActiveDays <int>, TVHrsDay <fct>, CompHrsDay <fct>, ## # TVHrsDayChild <int>, CompHrsDayChild <int>, Alcohol12PlusYr <fct>, ## # AlcoholDay <int>, AlcoholYear <int>, SmokeNow <fct>, Smoke100 <fct>, ## # SmokeAge <int>, Marijuana <fct>, AgeFirstMarij <int>, RegularMarij <fct>, ## # AgeRegMarij <int>, HardDrugs <fct>, SexEver <fct>, SexAge <int>, ## # SexNumPartnLife <int>, SexNumPartYear <int>, SameSex <fct>, ## # SexOrientation <fct>, WTINT2YR <dbl>, WTMEC2YR <dbl>, SDMVPSU <int>, ## # SDMVSTRA <int>, PregnantNow <fct> ``` ] ] --- <img src="img/pipe.png" class="title-hex"><img src="img/dplyr.png" class="title-hex"> ## `slice_min()` and `slice_max()` select rows with highest or lowest values of a variable. 
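By default `slice_min()` and `slice_max()` keep ties, so they can return more than `n` rows; this is why the `slice_max(Education)` example returns 2,656 rows instead of one. A minimal sketch on a hypothetical `scores` table, assuming dplyr 1.0 or later:

```r
library(dplyr)

# Hypothetical scores with a tie for the highest value (90)
scores <- tibble(
  id    = 1:5,
  score = c(90, 75, 90, 60, 82)
)

# Ties are kept by default, so n = 1 returns both rows scoring 90
top_with_ties <- slice_max(scores, score, n = 1)

# with_ties = FALSE returns exactly n rows (here, a single row scoring 90)
top_one <- slice_max(scores, score, n = 1, with_ties = FALSE)

# The two lowest scores, ordered from lowest up: ids 4 and 2
bottom_two <- slice_min(scores, score, n = 2)

top_with_ties
top_one
bottom_two
```

Use `with_ties = FALSE` when you need exactly `n` rows, for example exactly one row per group after `group_by()`.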
.panelset[ .panel[.panel-name[max] ```r nhanes %>% slice_max(Education) ## # A tibble: 2,656 x 78 ## ID SurveyYr Gender Age AgeMonths Race1 Race3 Education MaritalStatus ## <int> <fct> <fct> <int> <int> <fct> <fct> <fct> <fct> ## 1 51647 2009_10 female 45 541 White <NA> College Gr~ Married ## 2 51656 2009_10 male 58 707 White <NA> College Gr~ Divorced ## 3 51664 2009_10 female 53 644 White <NA> College Gr~ Married ## 4 51670 2009_10 male 22 269 White <NA> College Gr~ NeverMarried ## 5 51685 2009_10 female 56 677 White <NA> College Gr~ Married ## 6 51687 2009_10 male 78 937 White <NA> College Gr~ Married ## 7 51692 2009_10 male 54 655 Hispan~ <NA> College Gr~ Divorced ## 8 51696 2009_10 male 54 657 Black <NA> College Gr~ Widowed ## 9 51710 2009_10 female 26 319 White <NA> College Gr~ Married ## 10 51718 2009_10 female 51 614 Mexican <NA> College Gr~ Married ## # ... with 2,646 more rows, and 69 more variables: HHIncome <fct>, ## # HHIncomeMid <int>, Poverty <dbl>, HomeRooms <int>, HomeOwn <fct>, ## # Work <fct>, Weight <dbl>, Length <dbl>, HeadCirc <dbl>, Height <dbl>, ## # BMI <dbl>, BMICatUnder20yrs <fct>, BMI_WHO <fct>, Pulse <int>, ## # BPSysAve <int>, BPDiaAve <int>, BPSys1 <int>, BPDia1 <int>, BPSys2 <int>, ## # BPDia2 <int>, BPSys3 <int>, BPDia3 <int>, Testosterone <dbl>, ## # DirectChol <dbl>, TotChol <dbl>, UrineVol1 <int>, UrineFlow1 <dbl>, ## # UrineVol2 <int>, UrineFlow2 <dbl>, Diabetes <fct>, DiabetesAge <int>, ## # HealthGen <fct>, DaysPhysHlthBad <int>, DaysMentHlthBad <int>, ## # LittleInterest <fct>, Depressed <fct>, nPregnancies <int>, nBabies <int>, ## # Age1stBaby <int>, SleepHrsNight <int>, SleepTrouble <fct>, ## # PhysActive <fct>, PhysActiveDays <int>, TVHrsDay <fct>, CompHrsDay <fct>, ## # TVHrsDayChild <int>, CompHrsDayChild <int>, Alcohol12PlusYr <fct>, ## # AlcoholDay <int>, AlcoholYear <int>, SmokeNow <fct>, Smoke100 <fct>, ## # SmokeAge <int>, Marijuana <fct>, AgeFirstMarij <int>, RegularMarij <fct>, ## # AgeRegMarij <int>, HardDrugs 
<fct>, SexEver <fct>, SexAge <int>, ## # SexNumPartnLife <int>, SexNumPartYear <int>, SameSex <fct>, ## # SexOrientation <fct>, WTINT2YR <dbl>, WTMEC2YR <dbl>, SDMVPSU <int>, ## # SDMVSTRA <int>, PregnantNow <fct> ``` ] .panel[.panel-name[min] ```r nhanes %>% slice_min(Age) ## # A tibble: 820 x 78 ## ID SurveyYr Gender Age AgeMonths Race1 Race3 Education MaritalStatus ## <int> <fct> <fct> <int> <int> <fct> <fct> <fct> <fct> ## 1 51644 2009_10 male 0 9 White <NA> <NA> <NA> ## 2 51688 2009_10 male 0 3 White <NA> <NA> <NA> ## 3 51717 2009_10 female 0 4 White <NA> <NA> <NA> ## 4 51786 2009_10 male 0 10 Mexican <NA> <NA> <NA> ## 5 51876 2009_10 female 0 7 Mexican <NA> <NA> <NA> ## 6 51947 2009_10 female 0 10 Mexican <NA> <NA> <NA> ## 7 51962 2009_10 female 0 2 Hispanic <NA> <NA> <NA> ## 8 51988 2009_10 female 0 0 Black <NA> <NA> <NA> ## 9 52001 2009_10 female 0 8 Mexican <NA> <NA> <NA> ## 10 52034 2009_10 male 0 8 Mexican <NA> <NA> <NA> ## # ... with 810 more rows, and 69 more variables: HHIncome <fct>, ## # HHIncomeMid <int>, Poverty <dbl>, HomeRooms <int>, HomeOwn <fct>, ## # Work <fct>, Weight <dbl>, Length <dbl>, HeadCirc <dbl>, Height <dbl>, ## # BMI <dbl>, BMICatUnder20yrs <fct>, BMI_WHO <fct>, Pulse <int>, ## # BPSysAve <int>, BPDiaAve <int>, BPSys1 <int>, BPDia1 <int>, BPSys2 <int>, ## # BPDia2 <int>, BPSys3 <int>, BPDia3 <int>, Testosterone <dbl>, ## # DirectChol <dbl>, TotChol <dbl>, UrineVol1 <int>, UrineFlow1 <dbl>, ## # UrineVol2 <int>, UrineFlow2 <dbl>, Diabetes <fct>, DiabetesAge <int>, ## # HealthGen <fct>, DaysPhysHlthBad <int>, DaysMentHlthBad <int>, ## # LittleInterest <fct>, Depressed <fct>, nPregnancies <int>, nBabies <int>, ## # Age1stBaby <int>, SleepHrsNight <int>, SleepTrouble <fct>, ## # PhysActive <fct>, PhysActiveDays <int>, TVHrsDay <fct>, CompHrsDay <fct>, ## # TVHrsDayChild <int>, CompHrsDayChild <int>, Alcohol12PlusYr <fct>, ## # AlcoholDay <int>, AlcoholYear <int>, SmokeNow <fct>, Smoke100 <fct>, ## # SmokeAge <int>, Marijuana <fct>, 
AgeFirstMarij <int>, RegularMarij <fct>, ## # AgeRegMarij <int>, HardDrugs <fct>, SexEver <fct>, SexAge <int>, ## # SexNumPartnLife <int>, SexNumPartYear <int>, SameSex <fct>, ## # SexOrientation <fct>, WTINT2YR <dbl>, WTMEC2YR <dbl>, SDMVPSU <int>, ## # SDMVSTRA <int>, PregnantNow <fct> ``` ] ] --- name: your-turn background-color: var(--my-red) class: inverse .left-column[ ## Your turn<br><svg viewBox="0 0 576 512" style="height:1em;position:relative;display:inline-block;top:.1em;" xmlns="http://www.w3.org/2000/svg"> <path d="M402.3 344.9l32-32c5-5 13.7-1.5 13.7 5.7V464c0 26.5-21.5 48-48 48H48c-26.5 0-48-21.5-48-48V112c0-26.5 21.5-48 48-48h273.5c7.1 0 10.7 8.6 5.7 13.7l-32 32c-1.5 1.5-3.5 2.3-5.7 2.3H48v352h352V350.5c0-2.1.8-4.1 2.3-5.6zm156.6-201.8L296.3 405.7l-90.4 10c-26.2 2.9-48.5-19.2-45.6-45.6l10-90.4L432.9 17.1c22.9-22.9 59.9-22.9 82.7 0l43.2 43.2c22.9 22.9 22.9 60 .1 82.8zM460.1 174L402 115.9 216.2 301.8l-7.3 65.3 65.3-7.3L460.1 174zm64.8-79.7l-43.2-43.2c-4.1-4.1-10.8-4.1-14.8 0L436 82l58.1 58.1 30.9-30.9c4-4.2 4-10.8-.1-14.9z"></path></svg><br> ] .right-column[ ### Let's practice row reduction! ---- - Using any technique we've learned, do we have any duplicate rows in `nhanes`? - Find the average `DirectChol` for each `HealthGen` group. Using a slice function, which `HealthGen` level has the highest average `DirectChol`?
] <div class="countdown blink-colon noupdate-15" id="timer_60b09da0" style="bottom:0;left:0;" data-warnwhen="0"> <code class="countdown-time"><span class="countdown-digits minutes">02</span><span class="countdown-digits colon">:</span><span class="countdown-digits seconds">30</span></code> </div> --- name: question class: inverse, middle, center <svg viewBox="0 0 512 512" style="position:relative;display:inline-block;top:.1em;height:2em;" xmlns="http://www.w3.org/2000/svg"> <path d="M500.33 0h-47.41a12 12 0 0 0-12 12.57l4 82.76A247.42 247.42 0 0 0 256 8C119.34 8 7.9 119.53 8 256.19 8.1 393.07 119.1 504 256 504a247.1 247.1 0 0 0 166.18-63.91 12 12 0 0 0 .48-17.43l-34-34a12 12 0 0 0-16.38-.55A176 176 0 1 1 402.1 157.8l-101.53-4.87a12 12 0 0 0-12.57 12v47.41a12 12 0 0 0 12 12h200.33a12 12 0 0 0 12-12V12a12 12 0 0 0-12-12z"></path></svg> # How can I do the same action for many variables? --- # Across<img src="img/pipe.png" class="title-hex"><img src="img/dplyr.png" class="title-hex"> ---- .pull-left[ If you want to perform the same operation on multiple columns, copying and pasting can be tedious and error-prone: ```r df %>% summarise(a = mean(a), b = mean(b), c = mean(c), d = mean(d)) ``` ] .pull-right[ We can now use the `across()` function to write this kind of operation more succinctly and transparently: ```r df %>% summarise(across(a:d, mean)) ``` ] --- ## Syntaxes in across<img src="img/pipe.png" class="title-hex"><img src="img/dplyr.png" class="title-hex"> There are two syntaxes for supplying the function to `across()`:
.pull-left[ The function-then-options syntax:
```r
df %>%
  summarise(across(a:d,           # columns
                   max,           # function
                   na.rm = TRUE)) # options for the function
```
] .pull-right[ The purrr-style formula syntax:
```r
df %>%
  summarise(across(a:d,                       # columns
                   ~ max(.x, na.rm = TRUE)))  # formula
```
] --- template: live-coding --- # Across<img src="img/pipe.png" class="title-hex"><img src="img/dplyr.png" class="title-hex"> ---- `across()` can use tidy selection to choose the variables you'd like to operate on: .panelset[ .panel[.panel-name[Example 1]
```r
# mean of any column whose name starts with "Age"
nhanes %>% summarize(across(starts_with("Age"), mean, na.rm = TRUE))
## # A tibble: 1 x 5
## Age AgeMonths Age1stBaby AgeFirstMarij AgeRegMarij
## <dbl> <dbl> <dbl> <dbl> <dbl>
## 1 32.0 352. 21.7 17.0 17.6
```
] .panel[.panel-name[Example 2]
```r
# how many levels do we have for each factor?
nhanes %>% summarise(across(where(is.factor), nlevels))
## # A tibble: 1 x 29
## SurveyYr Gender Race1 Race3 Education MaritalStatus HHIncome HomeOwn Work
## <int> <int> <int> <int> <int> <int> <int> <int> <int>
## 1 2 2 5 6 5 6 12 3 3
## # ...
with 20 more variables: BMICatUnder20yrs <int>, BMI_WHO <int>, ## # Diabetes <int>, HealthGen <int>, LittleInterest <int>, Depressed <int>, ## # SleepTrouble <int>, PhysActive <int>, TVHrsDay <int>, CompHrsDay <int>, ## # Alcohol12PlusYr <int>, SmokeNow <int>, Smoke100 <int>, Marijuana <int>, ## # RegularMarij <int>, HardDrugs <int>, SexEver <int>, SameSex <int>, ## # SexOrientation <int>, PregnantNow <int> nhanes %>% summarise(across(where(is.character), ~ length(unique(.x)))) ## # A tibble: 1 x 0 ``` ] ] --- name: your-turn background-color: var(--my-red) class: inverse .left-column[ ## Your turn<br><svg viewBox="0 0 576 512" style="height:1em;position:relative;display:inline-block;top:.1em;" xmlns="http://www.w3.org/2000/svg"> <path d="M402.3 344.9l32-32c5-5 13.7-1.5 13.7 5.7V464c0 26.5-21.5 48-48 48H48c-26.5 0-48-21.5-48-48V112c0-26.5 21.5-48 48-48h273.5c7.1 0 10.7 8.6 5.7 13.7l-32 32c-1.5 1.5-3.5 2.3-5.7 2.3H48v352h352V350.5c0-2.1.8-4.1 2.3-5.6zm156.6-201.8L296.3 405.7l-90.4 10c-26.2 2.9-48.5-19.2-45.6-45.6l10-90.4L432.9 17.1c22.9-22.9 59.9-22.9 82.7 0l43.2 43.2c22.9 22.9 22.9 60 .1 82.8zM460.1 174L402 115.9 216.2 301.8l-7.3 65.3 65.3-7.3L460.1 174zm64.8-79.7l-43.2-43.2c-4.1-4.1-10.8-4.1-14.8 0L436 82l58.1 58.1 30.9-30.9c4-4.2 4-10.8-.1-14.9z"></path></svg><br> ] .right-column[ ### Let's practice across! ---- - For those who _have_ diabetes and those who _don't_, find the mean `Pulse`, `BPDiaAve` and `DirectChol`. 
- Use a tidy-select function inside of `across()` to find the maximum `UrineVol1` and `UrineVol2`.
]

<div class="countdown blink-colon noupdate-15" id="timer_60b09b2f" style="bottom:0;left:0;" data-warnwhen="0">
<code class="countdown-time"><span class="countdown-digits minutes">03</span><span class="countdown-digits colon">:</span><span class="countdown-digits seconds">30</span></code>
</div>

---
## Multiple functions in across<img src="img/pipe.png" class="title-hex"><img src="img/dplyr.png" class="title-hex">

.pull-left[
We often want to apply multiple functions to multiple columns. We could use this syntax:

```r
df %>%
  summarise(across(a:d, mean, na.rm = TRUE),
            across(a:d, sd,   na.rm = TRUE),
            across(a:d, min,  na.rm = TRUE),
            across(a:d, max,  na.rm = TRUE))
```
]

.pull-right[
But it is easier to include multiple functions in a single `across()` call using a named list:

```r
df %>%
  summarise(across(a:d,                        # columns
                   list(mean = mean, sd = sd,
                        min = min, max = max), # functions
                   na.rm = TRUE))              # options
```
]

---
template: live-coding

---
## Controlling variable names in across<img src="img/pipe.png" class="title-hex"><img src="img/dplyr.png" class="title-hex">

```r
nhanes %>%
  summarise(across(c(Age, HHIncomeMid),
                   list(mean = mean, sd = sd),
                   na.rm = TRUE))
## # A tibble: 1 x 4
##   Age_mean Age_sd HHIncomeMid_mean HHIncomeMid_sd
##      <dbl>  <dbl>            <dbl>          <dbl>
## 1     32.0   24.8           47386.         32223.
```

When we calculate a mean and a standard deviation across multiple variables, notice that the new variable names always follow the pattern `{name_of_variable}_{function}`. This is the default behavior of `across()`: the column name, an underscore, and the name of the function from the named list.

---
## Controlling variable names in across<img src="img/pipe.png" class="title-hex"><img src="img/dplyr.png" class="title-hex">

To customize how the new variables are named, you can use `glue` syntax in the `.names` argument.
+ `{.fn}` refers to the name of the function you used
+ `{.col}` refers to the name of the column

```r
# new columns: mean_of_Age, sd_of_Age, mean_of_HHIncomeMid, sd_of_HHIncomeMid
nhanes %>%
  summarise(across(c(Age, HHIncomeMid),
                   list(mean = mean, sd = sd),
                   na.rm = TRUE,
                   .names = "{.fn}_of_{.col}"))
```

---
name: your-turn
background-color: var(--my-red)
class: inverse

.left-column[
## Your turn<br><svg viewBox="0 0 576 512" style="height:1em;position:relative;display:inline-block;top:.1em;" xmlns="http://www.w3.org/2000/svg"> <path d="M402.3 344.9l32-32c5-5 13.7-1.5 13.7 5.7V464c0 26.5-21.5 48-48 48H48c-26.5 0-48-21.5-48-48V112c0-26.5 21.5-48 48-48h273.5c7.1 0 10.7 8.6 5.7 13.7l-32 32c-1.5 1.5-3.5 2.3-5.7 2.3H48v352h352V350.5c0-2.1.8-4.1 2.3-5.6zm156.6-201.8L296.3 405.7l-90.4 10c-26.2 2.9-48.5-19.2-45.6-45.6l10-90.4L432.9 17.1c22.9-22.9 59.9-22.9 82.7 0l43.2 43.2c22.9 22.9 22.9 60 .1 82.8zM460.1 174L402 115.9 216.2 301.8l-7.3 65.3 65.3-7.3L460.1 174zm64.8-79.7l-43.2-43.2c-4.1-4.1-10.8-4.1-14.8 0L436 82l58.1 58.1 30.9-30.9c4-4.2 4-10.8-.1-14.9z"></path></svg><br>
]
.right-column[
### Let's practice more across!

----

You plan to predict `Diabetes` status with the following independent variables: `Weight`, `Pulse`, `SleepHrsNight`, `PhysActiveDays`, and `AlcoholDay`.

- Use `summarize()` and `across()` to find the mean and standard deviation of each of these variables.

- You plan to use an algorithm that needs all predictors on the same scale. Using `mutate()` and `across()`, normalize your variables with the `scale()` function. Name each new variable after its original column followed by `_normalized` (for example, `Weight_normalized`).
]

<div class="countdown blink-colon noupdate-15" id="timer_60b09a4d" style="bottom:0;left:0;" data-warnwhen="0">
<code class="countdown-time"><span class="countdown-digits minutes">04</span><span class="countdown-digits colon">:</span><span class="countdown-digits seconds">00</span></code>
</div>

---
## Special across examples<img src="img/pipe.png" class="title-hex"><img src="img/dplyr.png" class="title-hex">

- Example: Find all rows where no variable has missing values:

```r
nhanes %>%
  filter(across(everything(), ~ !is.na(.x)))
```

- Example: Use `across()` to enable tidy selection inside of `count()` or other functions:

```r
nhanes %>%
  count(across(contains("Smoke")), sort = TRUE)
```

---
template: break

---
name: question
class: inverse, middle, center

<svg viewBox="0 0 512 512" style="position:relative;display:inline-block;top:.1em;height:2em;" xmlns="http://www.w3.org/2000/svg"> <path d="M377.941 169.941V216H134.059v-46.059c0-21.382-25.851-32.09-40.971-16.971L7.029 239.029c-9.373 9.373-9.373 24.568 0 33.941l86.059 86.059c15.119 15.119 40.971 4.411 40.971-16.971V296h243.882v46.059c0 21.382 25.851 32.09 40.971 16.971l86.059-86.059c9.373-9.373 9.373-24.568 0-33.941l-86.059-86.059c-15.119-15.12-40.971-4.412-40.971 16.97z"></path></svg>

# What if I want to calculate the mean across multiple columns for one row?

---
# Why do I need rowwise?<img src="img/pipe.png" class="title-hex"><img src="img/dplyr.png" class="title-hex">

Let's try to grab the mean across all height values for each row:

```r
fruits %>%
  mutate(height_mean = mean(c(height_1, height_2, height_3)))
## # A tibble: 3 x 7
##   fruit      height_1 height_2 height_3 width weight height_mean
##   <chr>         <dbl>    <dbl>    <dbl> <dbl>  <dbl>       <dbl>
## 1 Banana            4      4.2      3.5     1   0.5         7.74
## 2 Strawberry        1      0.9      1.2     1   0.25        7.74
## 3 Pineapple        18     17.7     19.2     6   3           7.74
```

Without `rowwise()`, `mean()` receives all nine height values at once, so every row gets the same overall mean (7.74).

---
# Why do I need rowwise?<img src="img/dplyr.png" class="title-hex">

`rowwise()` lets us apply functions _within_ **each row**:

```r
fruits %>%
  rowwise(fruit) %>%
  mutate(height_mean = mean(c(height_1, height_2, height_3)))
## # A tibble: 3 x 7
## # Rowwise:  fruit
##   fruit      height_1 height_2 height_3 width weight height_mean
##   <chr>         <dbl>    <dbl>    <dbl> <dbl>  <dbl>       <dbl>
## 1 Banana            4      4.2      3.5     1   0.5          3.9
## 2 Strawberry        1      0.9      1.2     1   0.25         1.03
## 3 Pineapple        18     17.7     19.2     6   3           18.3
```

---
name: your-turn
background-color: var(--my-red)
class: inverse

.left-column[
## Your turn<br><svg viewBox="0 0 576 512" style="height:1em;position:relative;display:inline-block;top:.1em;" xmlns="http://www.w3.org/2000/svg"> <path d="M402.3 344.9l32-32c5-5 13.7-1.5 13.7 5.7V464c0 26.5-21.5 48-48 48H48c-26.5 0-48-21.5-48-48V112c0-26.5 21.5-48 48-48h273.5c7.1 0 10.7 8.6 5.7 13.7l-32 32c-1.5 1.5-3.5 2.3-5.7 2.3H48v352h352V350.5c0-2.1.8-4.1 2.3-5.6zm156.6-201.8L296.3 405.7l-90.4 10c-26.2 2.9-48.5-19.2-45.6-45.6l10-90.4L432.9 17.1c22.9-22.9 59.9-22.9 82.7 0l43.2 43.2c22.9 22.9 22.9 60 .1 82.8zM460.1 174L402 115.9 216.2 301.8l-7.3 65.3 65.3-7.3L460.1 174zm64.8-79.7l-43.2-43.2c-4.1-4.1-10.8-4.1-14.8 0L436 82l58.1 58.1 30.9-30.9c4-4.2 4-10.8-.1-14.9z"></path></svg><br>
]
.right-column[
### Let's practice using rowwise!

----

Add a new column that calculates the average `UrineFlow` and `UrineVol` for each individual.

Extra challenge: Use `pivot_longer()`, `group_by()`, and `mutate()` to get the same result.
]

<div class="countdown blink-colon noupdate-15" id="timer_60b09ce5" style="bottom:0;left:0;" data-warnwhen="0">
<code class="countdown-time"><span class="countdown-digits minutes">04</span><span class="countdown-digits colon">:</span><span class="countdown-digits seconds">00</span></code>
</div>

---
## Can I use across + rowwise? <img src="img/pipe.png" class="title-hex"><img src="img/dplyr.png" class="title-hex">

Sort of. Instead of `across()`, you can use `c_across()` to succinctly select many variables inside a rowwise operation. `c_across()` uses tidy-select helpers. If we want to use our fruits example...

```r
fruits %>%
  rowwise(fruit) %>%
  mutate(height_mean = mean(c_across(starts_with("height"))))
## # A tibble: 3 x 7
## # Rowwise:  fruit
##   fruit      height_1 height_2 height_3 width weight height_mean
##   <chr>         <dbl>    <dbl>    <dbl> <dbl>  <dbl>       <dbl>
## 1 Banana            4      4.2      3.5     1   0.5          3.9
## 2 Strawberry        1      0.9      1.2     1   0.25         1.03
## 3 Pineapple        18     17.7     19.2     6   3           18.3
```

- So `c_across()` is a _rowwise_ version of the function we learned earlier, `across()`.

---
name: question
class: inverse, middle, center

<svg viewBox="0 0 512 512" style="position:relative;display:inline-block;top:.1em;height:2em;" xmlns="http://www.w3.org/2000/svg"> <path d="M328 256c0 39.8-32.2 72-72 72s-72-32.2-72-72 32.2-72 72-72 72 32.2 72 72zm104-72c-39.8 0-72 32.2-72 72s32.2 72 72 72 72-32.2 72-72-32.2-72-72-72zm-352 0c-39.8 0-72 32.2-72 72s32.2 72 72 72 72-32.2 72-72-32.2-72-72-72z"></path></svg>

# What if I need to repeat what I'm doing for lots of things that aren't variables?

---
# Purrr example #1 <img src="img/readr.png" class="title-hex"><img src="img/purrr.png" class="title-hex"><img src="img/glue.png" class="title-hex">

```r
csv_files <- list.files("data", pattern = "\\.csv$")
# read every CSV file in data/, then stack them into one tibble
all_files <- map(glue("data/{csv_files}"), read_csv) %>%
  bind_rows()
```

---
# Purrr example #2

```r
nhanes %>%
  group_by(Education) %>%
  nest() %>%
  # write one CSV file per education level
  pwalk(function(data, Education) {
    write_csv(data, glue("data/nhanes_{Education}.csv"))
  })
```

---
name: your-turn
background-color: var(--my-red)
class: inverse

.left-column[
## Your turn<br><svg viewBox="0 0 576 512" style="height:1em;position:relative;display:inline-block;top:.1em;" xmlns="http://www.w3.org/2000/svg"> <path d="M402.3 344.9l32-32c5-5 13.7-1.5 13.7 5.7V464c0 26.5-21.5 48-48 48H48c-26.5 0-48-21.5-48-48V112c0-26.5 21.5-48 48-48h273.5c7.1 0 10.7 8.6 5.7 13.7l-32 32c-1.5 1.5-3.5 2.3-5.7 2.3H48v352h352V350.5c0-2.1.8-4.1 2.3-5.6zm156.6-201.8L296.3 405.7l-90.4 10c-26.2 2.9-48.5-19.2-45.6-45.6l10-90.4L432.9 17.1c22.9-22.9 59.9-22.9 82.7 0l43.2 43.2c22.9 22.9 22.9 60 .1 82.8zM460.1 174L402 115.9 216.2 301.8l-7.3 65.3 65.3-7.3L460.1 174zm64.8-79.7l-43.2-43.2c-4.1-4.1-10.8-4.1-14.8 0L436 82l58.1 58.1 30.9-30.9c4-4.2 4-10.8-.1-14.9z"></path></svg><br>
]
.right-column[
### Day 3 Case Study

----

Apply the data-wrangling skills we have learned to the `nhanes` dataset:

- Find some summary statistics
- Create new variables using rowwise
- Use across to add multiple variables at once
- Reorder one of the existing factors
- Experiment with pivoting to make `BPDia1`, `BPDia2`, and `BPDia3` tidy
]

---
class: goodbye-slide, inverse, middle, left

.pull-left[
<img src="https://kelseygonzalez.github.io/author/kelsey-e.-gonzalez/avatar.png" class = "rounded"/>

# Thank you!

### Here's where you can find me...
.right[ [kelseygonzalez.github.io <svg viewBox="0 0 512 512" style="height:1em;position:relative;display:inline-block;top:.1em;" xmlns="http://www.w3.org/2000/svg"> <path d="M326.612 185.391c59.747 59.809 58.927 155.698.36 214.59-.11.12-.24.25-.36.37l-67.2 67.2c-59.27 59.27-155.699 59.262-214.96 0-59.27-59.26-59.27-155.7 0-214.96l37.106-37.106c9.84-9.84 26.786-3.3 27.294 10.606.648 17.722 3.826 35.527 9.69 52.721 1.986 5.822.567 12.262-3.783 16.612l-13.087 13.087c-28.026 28.026-28.905 73.66-1.155 101.96 28.024 28.579 74.086 28.749 102.325.51l67.2-67.19c28.191-28.191 28.073-73.757 0-101.83-3.701-3.694-7.429-6.564-10.341-8.569a16.037 16.037 0 0 1-6.947-12.606c-.396-10.567 3.348-21.456 11.698-29.806l21.054-21.055c5.521-5.521 14.182-6.199 20.584-1.731a152.482 152.482 0 0 1 20.522 17.197zM467.547 44.449c-59.261-59.262-155.69-59.27-214.96 0l-67.2 67.2c-.12.12-.25.25-.36.37-58.566 58.892-59.387 154.781.36 214.59a152.454 152.454 0 0 0 20.521 17.196c6.402 4.468 15.064 3.789 20.584-1.731l21.054-21.055c8.35-8.35 12.094-19.239 11.698-29.806a16.037 16.037 0 0 0-6.947-12.606c-2.912-2.005-6.64-4.875-10.341-8.569-28.073-28.073-28.191-73.639 0-101.83l67.2-67.19c28.239-28.239 74.3-28.069 102.325.51 27.75 28.3 26.872 73.934-1.155 101.96l-13.087 13.087c-4.35 4.35-5.769 10.79-3.783 16.612 5.864 17.194 9.042 34.999 9.69 52.721.509 13.906 17.454 20.446 27.294 10.606l37.106-37.106c59.271-59.259 59.271-155.699.001-214.959z"></path></svg>](https://kelseygonzalez.github.io/)<br/> [@KelseyEGonzalez <svg viewBox="0 0 512 512" style="height:1em;position:relative;display:inline-block;top:.1em;" xmlns="http://www.w3.org/2000/svg"> <path d="M459.37 151.716c.325 4.548.325 9.097.325 13.645 0 138.72-105.583 298.558-298.558 298.558-59.452 0-114.68-17.219-161.137-47.106 8.447.974 16.568 1.299 25.34 1.299 49.055 0 94.213-16.568 130.274-44.832-46.132-.975-84.792-31.188-98.112-72.772 6.498.974 12.995 1.624 19.818 1.624 9.421 0 18.843-1.3 27.614-3.573-48.081-9.747-84.143-51.98-84.143-102.985v-1.299c13.969 
7.797 30.214 12.67 47.431 13.319-28.264-18.843-46.781-51.005-46.781-87.391 0-19.492 5.197-37.36 14.294-52.954 51.655 63.675 129.3 105.258 216.365 109.807-1.624-7.797-2.599-15.918-2.599-24.04 0-57.828 46.782-104.934 104.934-104.934 30.213 0 57.502 12.67 76.67 33.137 23.715-4.548 46.456-13.32 66.599-25.34-7.798 24.366-24.366 44.833-46.132 57.827 21.117-2.273 41.584-8.122 60.426-16.243-14.292 20.791-32.161 39.308-52.628 54.253z"></path></svg>](https://twitter.com/kelseyegonzalez)<br/> [@KelseyGonzalez <svg viewBox="0 0 496 512" style="position:relative;display:inline-block;top:.1em;height:1em;" xmlns="http://www.w3.org/2000/svg"> <path d="M165.9 397.4c0 2-2.3 3.6-5.2 3.6-3.3.3-5.6-1.3-5.6-3.6 0-2 2.3-3.6 5.2-3.6 3-.3 5.6 1.3 5.6 3.6zm-31.1-4.5c-.7 2 1.3 4.3 4.3 4.9 2.6 1 5.6 0 6.2-2s-1.3-4.3-4.3-5.2c-2.6-.7-5.5.3-6.2 2.3zm44.2-1.7c-2.9.7-4.9 2.6-4.6 4.9.3 2 2.9 3.3 5.9 2.6 2.9-.7 4.9-2.6 4.6-4.6-.3-1.9-3-3.2-5.9-2.9zM244.8 8C106.1 8 0 113.3 0 252c0 110.9 69.8 205.8 169.5 239.2 12.8 2.3 17.3-5.6 17.3-12.1 0-6.2-.3-40.4-.3-61.4 0 0-70 15-84.7-29.8 0 0-11.4-29.1-27.8-36.6 0 0-22.9-15.7 1.6-15.4 0 0 24.9 2 38.6 25.8 21.9 38.6 58.6 27.5 72.9 20.9 2.3-16 8.8-27.1 16-33.7-55.9-6.2-112.3-14.3-112.3-110.5 0-27.5 7.6-41.3 23.6-58.9-2.6-6.5-11.1-33.3 2.6-67.9 20.9-6.5 69 27 69 27 20-5.6 41.5-8.5 62.8-8.5s42.8 2.9 62.8 8.5c0 0 48.1-33.6 69-27 13.7 34.7 5.2 61.4 2.6 67.9 16 17.7 25.8 31.5 25.8 58.9 0 96.5-58.9 104.2-114.8 110.5 9.2 7.9 17 22.9 17 46.4 0 33.7-.3 75.4-.3 83.6 0 6.5 4.6 14.4 17.3 12.1C428.2 457.8 496 362.9 496 252 496 113.3 383.5 8 244.8 8zM97.2 352.9c-1.3 1-1 3.3.7 5.2 1.6 1.6 3.9 2.3 5.2 1 1.3-1 1-3.3-.7-5.2-1.6-1.6-3.9-2.3-5.2-1zm-10.8-8.1c-.7 1.3.3 2.9 2.3 3.9 1.6 1 3.6.7 4.3-.7.7-1.3-.3-2.9-2.3-3.9-2-.6-3.6-.3-4.3.7zm32.4 35.6c-1.6 1.3-1 4.3 1.3 6.2 2.3 2.3 5.2 2.6 6.5 1 1.3-1.3.7-4.3-1.3-6.2-2.2-2.3-5.2-2.6-6.5-1zm-11.4-14.7c-1.6 1-1.6 3.6 0 5.9 1.6 2.3 4.3 3.3 5.6 2.3 1.6-1.3 1.6-3.9 0-6.2-1.4-2.3-4-3.3-5.6-2z"></path></svg>](https://github.com/KelseyGonzalez) 
]]

---
class: inverse, middle, left

## Acknowledgements:

[Slide template](https://spcanelon.github.io/xaringan-basics-and-beyond/)

[Lecture structure](https://american-stat-412612.netlify.app/)

[xaringan](https://github.com/yihui/xaringan)

[xaringanExtra](https://pkg.garrickadenbuie.com/xaringanExtra/#/)

[flipbookr](https://github.com/EvaMaeRey/flipbookr)